Hypothesis: The AI arms race fixates on scaling frontier models into trillion-parameter behemoths but overlooks the power of the “two-stack firm” architecture. Pairing these general giants with nimble small language models (SLMs) will unlock client-safe workflow efficiencies, lower delivery costs, and de-risk operations by shifting firms from hyperscaler dependence to on-premise innovation.

The Two-Stack Firm: Pairing General Models with Small Language Models for Client-Safe Speed

Introduction

By late 2025, the AI arms race feels like an overhyped spectacle: all sizzle and no steak. What started as single-prompt chats, like asking early ChatGPT to draft an email or produce a cute ripoff of your favorite poet, has evolved into agent-like models such as OpenAI’s o3-style systems that infer intent, research autonomously, and chain prompts into multi-step tasks. The productivity leap is undeniable, but it slams into hard limits: token caps, ballooning costs, and privacy concerns, with every third-party API call leaving firms wary of shipping client-sensitive data offsite.

All the while, each costly increment of adoption delivers less wow factor. Take the GPT-5 rollout as an example. We were all blown away by the image generation abilities of GPT-4o, so we waited for GPT-5 with great anticipation, only to feel let down when it barely moved MMLU from 88 percent to 91 percent. Incremental wow-factor gains are diminishing, while API fees (particularly for thinking models) are skyrocketing. Anyone paying attention knows this anecdotally. New models flex on esoteric benchmarks that simulate impossibly complex math and physics problems the average user will never see in the wild, rather than highlighting newly unlocked capabilities.

This mess sets the stage for a smarter conversation about architecture. Pairing agent-first, small-to-medium-sized local models with existing frontier models can give us the best of both worlds while avoiding ballooning costs, client data risks, and the uncanny valley of AI that is just about good enough, but not quite, and too general to be truly useful. Advisory firms that understand this will see the biggest productivity uplift since the advent of PowerPoint. Those that do not will likely be unable to compete.

Stack One: General Models

General models, the frontier LLMs, are the obvious stars: GPT-5 (OpenAI, August 2025), Claude Sonnet 4.5 (Anthropic, September 2025), Gemini 2.5 Pro (Google, updated September 2025), and Llama 4 Maverick (Meta, April 2025). These beasts boast anywhere from 70 billion to over a trillion parameters, and they thrive on vast pre-training, which enables emergent abilities such as multi-step reasoning and multimodal synthesis.

Their strength isn’t just scale; it is handling ambiguity. GPT-5, with a 128K-token context, scores 60%+ on GPQA, chaining thoughts to simulate executive decisions. Claude Sonnet 4.5 shines in coding and agent-building: it reduces harmful outputs by 40% over its predecessors via constitutional AI and hits 88% on the HumanEval coding benchmark. Gemini 2.5 Pro’s 2M-token window ingests entire codebases or reports, and it blends in vision for chart analysis at 85% MMMU accuracy. Llama 4 Maverick is self-hostable and matches proprietary rivals on math (82% on the MATH benchmark) while allowing custom safeguards. The irony is not lost on me: I just called out the lack of real-world ways to showcase frontier models beyond esoteric benchmarks, then dove right into them. This, hopefully, proves my point about diminishing tangible returns.

But hidden weaknesses lurk. Beyond the obvious costs ($0.50-$2.00 per million tokens), latency masks deeper issues: 2-10 seconds per query disrupts flow states. Hallucinations, at 10-20% error rates, erode trust and compound over time, because hallucinations that go uncaught beget new ones. This fosters “AI fatigue” in users. Privacy? Cloud dependency creates data moats for providers, and for firms it is a vulnerability: unseen correlations in logged queries could leak competitive intel.
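To put rough numbers on the compounding claim, the sketch below assumes, purely for illustration, that every step in an agentic chain hallucinates independently at the same per-step rate and that nothing catches the error. Under that simplification, the probability that an n-step chain stays clean is (1 - p)^n. The function name is hypothetical, and real errors are neither independent nor uniform, so treat the output as intuition rather than a forecast.

```python
def clean_chain_probability(error_rate: float, steps: int) -> float:
    """Chance that every step in a chain is error-free.

    Assumes each step errs independently with probability `error_rate`
    and that no error is ever caught (a deliberate simplification).
    """
    if not 0.0 <= error_rate <= 1.0:
        raise ValueError("error_rate must be between 0 and 1")
    if steps < 0:
        raise ValueError("steps must be non-negative")
    return (1.0 - error_rate) ** steps


if __name__ == "__main__":
    # Per-step error rates taken from the 10-20% range quoted above.
    for rate in (0.10, 0.20):
        for steps in (1, 5, 10):
            p = clean_chain_probability(rate, steps)
            print(f"error rate {rate:.0%}, {steps:>2} steps: {p:.1%} chance of a clean chain")
```

At a 10% per-step rate, a ten-step chain stays clean only about 35% of the time (0.9^10 ≈ 0.349); at 20%, about 11% (0.8^10 ≈ 0.107). Longer chains need the guardrails and review loops discussed later for exactly this reason.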

Stack Two: Small Language Models

SLMs flip the script and punch above their weight. Defined here as models under 20B parameters, exemplars include Phi-3.5 (Microsoft, 3.8B, 2024), Gemma 2 (Google, 9B, mid-2024), Mistral NeMo (12B, 2024), and Llama 3.1-8B (Meta). I would also include the larger Llama models in this category, as they can be run on-prem if one has the kit. NVIDIA’s Nemotron Nano-9B-v2 (9B parameters, August 2025) outperforms similar-sized models in reasoning, coding, and instruction following with up to 6x higher throughput, and it is optimized for agentic workloads on a single GPU. Running Llama 3.1-8B can be 10x to 30x cheaper than running its 405B sibling, depending on query details. Distilled from larger kin, SLMs run on commodity hardware and prioritize inference efficiency, and with no API call there is no chance of an offsite data leak.

Their edge is not just speed (sub-100ms latency, 200+ tokens/sec) but adaptability. Phi-3.5 rivals 70B models on niche tasks after fine-tuning and scores 78% on MMLU at a tenth of the energy. Gemma 2, quantized to 4 bits, fits on mobile devices and scores 75% on GSM8K math. Mistral NeMo can be tuned for domains such as legal, where it hits 85% on specialized QA. Llama 3.1-8B, needing under 5GB of memory when quantized, maintains 70% on commonsense reasoning thanks to its efficient architecture.
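The memory figures above follow from simple arithmetic: weight memory is roughly parameter count times bits per weight, divided by 8 to get bytes. The sketch below applies that rule of thumb to the models named in this section. It counts weights only and deliberately ignores the KV cache, activations, and runtime overhead, which add more on top; the function and dictionary names are illustrative.

```python
def weight_memory_gb(params_billions: float, bits_per_weight: int) -> float:
    """Approximate memory for model weights alone, in GB (1 GB = 1e9 bytes).

    billions of params * bits per weight / 8 bits per byte = GB.
    KV cache, activations, and runtime overhead are NOT included.
    """
    return params_billions * bits_per_weight / 8


# Parameter counts (in billions) for the SLMs discussed in this section.
MODELS = {
    "Phi-3.5 (3.8B)": 3.8,
    "Llama 3.1-8B": 8.0,
    "Gemma 2 (9B)": 9.0,
    "Mistral NeMo (12B)": 12.0,
}

if __name__ == "__main__":
    for name, params in MODELS.items():
        sizes = ", ".join(
            f"{bits}-bit: {weight_memory_gb(params, bits):.1f} GB" for bits in (16, 8, 4)
        )
        print(f"{name:<20} {sizes}")
```

At 4 bits, Llama 3.1-8B needs about 4 GB for its weights, consistent with the under-5GB figure above, and Gemma 2 9B about 4.5 GB; at 16 bits the same models need four times as much.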

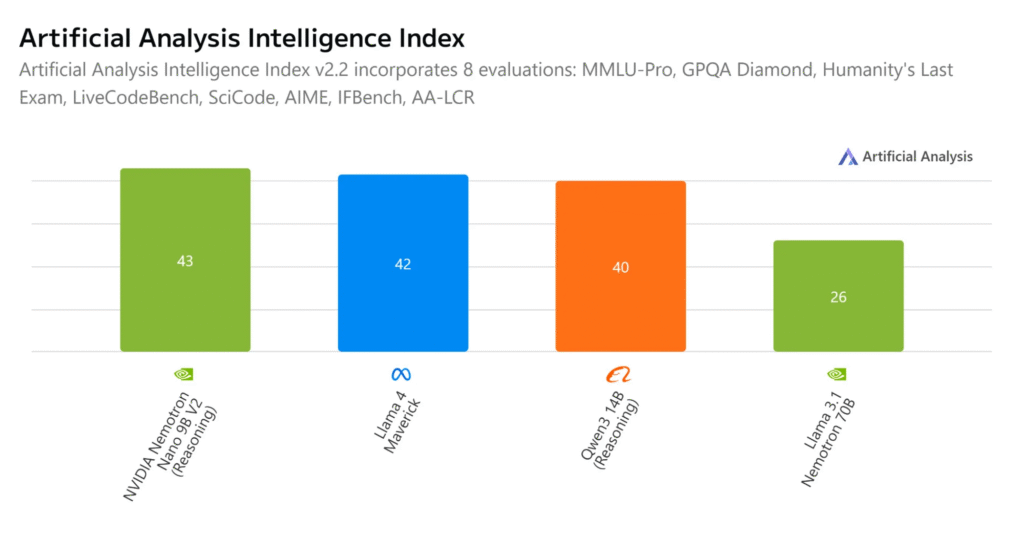

Figure 1. Artificial Analysis Intelligence Index chart comparing Nano 9B V2 to Llama 4 Maverick, Qwen 3 14B, and Llama 3.1 Nemotron 70B

The benefits extend further. Local runs protect privacy by preventing the “data gravity” pull toward the cloud. Costs dip to $0.01-0.05 per million tokens. Domain mastery emerges from fine-tuning on proprietary data, creating “firm-specific brains.” It will not be long before advisory-specific problem solving is adapted to on-prem models. Soon, the smartest consultant on the project will likely not be a human.

Examples: Phi-3 on laptops for offline developers; Apple’s on-device models in iOS 26 handle 80% of Siri requests locally, though Apple definitely has catching up to do. In firms, deployments such as banks’ edge fraud detection with Gemma show 50% faster responses than cloud LLMs.

Adoption: the 2025 AI Index notes that SLMs match 2023 LLMs at 10x lower cost. This fuels 50% pilots. Drawback: without the distillation horsepower of frontier models, SLMs lack emergent creativity and the ability to generate “original” ideas. The result is a bifurcation of uses: frontier models for ideation, SLMs for execution.

Speculation: GPU development, especially VRAM expansion, will outpace the real-world gains from frontier models. Firms will therefore have access to increasingly large, rack-runnable local models. SLMs will give way to mid-sized models as GPU VRAM capacity grows from dozens of gigabytes to hundreds, or even a terabyte. This will unlock firm- and task-specific models that rival current frontier models in capability.
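Running the same weight-memory arithmetic in reverse gives a feel for this speculation: for a given VRAM budget, how large a model could fit? The sketch reserves a fraction of memory for KV cache and runtime overhead. The 20% default is an assumption for illustration, not a measured figure, and the VRAM tiers in the loop are illustrative rather than specific products.

```python
def max_params_billions(
    vram_gb: float, bits_per_weight: int, overhead_fraction: float = 0.2
) -> float:
    """Largest parameter count (in billions) whose weights fit in `vram_gb`.

    `overhead_fraction` of memory is reserved for KV cache and runtime;
    the 20% default is an illustrative assumption, not a measured figure.
    """
    if not 0.0 <= overhead_fraction < 1.0:
        raise ValueError("overhead_fraction must be in [0, 1)")
    usable_gb = vram_gb * (1.0 - overhead_fraction)
    return usable_gb * 8 / bits_per_weight


if __name__ == "__main__":
    # Illustrative tiers: "dozens" of GB today, "hundreds" or a terabyte later.
    for vram in (48, 192, 1024):
        fits = ", ".join(
            f"{bits}-bit: ~{max_params_billions(vram, bits):.0f}B" for bits in (16, 8, 4)
        )
        print(f"{vram:>5} GB VRAM -> {fits}")
```

Under these assumptions, 48 GB holds roughly a 77B-parameter model at 4 bits, while a 1 TB pool would hold around 1.6 trillion parameters at the same precision, which is frontier-model territory.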

The Two-Stack Architecture

Two-stacks aren’t additive; they are multiplicative. General models explore chaos and abstract patterns. SLMs execute precisely, grounded in reality. One cannot say they are unable to hallucinate, but they are far less likely to, particularly bidirectional RAG setups such as Memagents running on MCP (Model Context Protocol) servers. Guardrails (RAG, fact-checkers) further mitigate hallucinations. QA/QC bots cynically review agentically generated work with the zeal of an overzealous Big Four project manager. The only major difference, of course, is that the bots work 24/7 without distractions or HR complaints.
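As a toy illustration of a rule-based QA/QC check, the sketch below flags any sentence in a generated draft that cites a number not found in the source documents it was supposedly built from. This is a hypothetical, deliberately crude rule written for illustration, not how Memagents or any particular fact-checking product works; production guardrails would combine retrieval with semantic checks.

```python
import re

# Integers or decimals, optionally followed by a percent sign.
NUMBER = re.compile(r"\d+(?:\.\d+)?%?")


def ungrounded_sentences(draft: str, sources: list[str]) -> list[str]:
    """Return draft sentences that cite a number found in none of the sources.

    A toy QA/QC rule: exact string matching only, no semantic understanding.
    """
    source_numbers: set[str] = set()
    for doc in sources:
        source_numbers.update(NUMBER.findall(doc))

    flagged = []
    # Naive sentence split on ., ! or ? followed by whitespace.
    for sentence in re.split(r"(?<=[.!?])\s+", draft.strip()):
        if any(n not in source_numbers for n in NUMBER.findall(sentence)):
            flagged.append(sentence)
    return flagged


if __name__ == "__main__":
    sources = ["Q3 revenue grew 12% to $4.1M."]
    draft = "Revenue grew 12% in Q3. Margins expanded 30%."
    print(ungrounded_sentences(draft, sources))  # ['Margins expanded 30%.']
```

The second sentence is flagged because 30% appears in no source; the first passes because both 12% and the 3 in Q3 are grounded. Exact string matching misses paraphrased numbers (“twelve percent”), which is one reason real reviewers layer several checks.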

Privacy: data stays local, in air-gapped file systems segregated by client or project using MCP-docked Memagents. This means one can maintain “Chinese walls” between engagements and still get the best of AI without compromise.
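A minimal sketch of how an information barrier could be enforced in the retrieval layer of a local stack: documents are partitioned by client, each engagement team is mapped to one client, and any cross-client read is refused before data ever reaches a model. `ClientVault`, `InformationBarrierError`, and the client names are hypothetical, and a real deployment would enforce this at the file-system and identity layers rather than in application code alone.

```python
from dataclasses import dataclass, field


class InformationBarrierError(PermissionError):
    """Raised when a request would cross a client information barrier."""


@dataclass
class ClientVault:
    """Hypothetical per-client document store enforcing a Chinese wall.

    Each engagement team is assigned to one client, and documents can only
    be read by teams assigned to the client that owns them.
    """

    team_client: dict[str, str] = field(default_factory=dict)
    documents: dict[str, list[str]] = field(default_factory=dict)

    def assign(self, team: str, client: str) -> None:
        self.team_client[team] = client

    def add(self, client: str, text: str) -> None:
        self.documents.setdefault(client, []).append(text)

    def read(self, team: str, client: str) -> list[str]:
        # Refuse before any data reaches a model if the team is walled off.
        if self.team_client.get(team) != client:
            raise InformationBarrierError(f"{team!r} may not access {client!r}")
        return list(self.documents.get(client, []))


if __name__ == "__main__":
    vault = ClientVault()
    vault.assign("team-a", "BankCo")
    vault.add("BankCo", "Q3 board pack")
    vault.add("RivalBank", "Merger memo")
    print(vault.read("team-a", "BankCo"))  # ['Q3 board pack']
    try:
        vault.read("team-a", "RivalBank")
    except InformationBarrierError as err:
        print("blocked:", err)
```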

The future is heterogeneous: SLMs handle the bulk of operational subtasks, while LLMs are invoked selectively for tasks that need their breadth. Think of SLMs as the workers in a digital factory (efficient, specialized, and reliable) and LLMs as consultants called in when broad expertise is required. Enterprises equipped with these tools can build heterogeneous systems of AI models, deploying fine-tuned SLMs for core workloads and reserving LLMs for occasional multi-step strategic tasks. This approach improves results with substantially reduced power and costs.
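The routing idea can be sketched as a simple rule-based dispatcher. The task labels, the set of “frontier-worthy” task kinds, and the policy that client-sensitive tasks never leave the local SLM are all assumptions made for illustration; a production router might instead use a classifier or confidence scores.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    LOCAL_SLM = "local_slm"        # default worker: fast, cheap, on-prem
    FRONTIER_LLM = "frontier_llm"  # consultant: broad, slower, pricier


@dataclass(frozen=True)
class Task:
    kind: str                   # hypothetical labels such as "extract" or "ideate"
    contains_client_data: bool


# Illustrative task kinds assumed to need a frontier model's breadth.
FRONTIER_KINDS = frozenset({"ideate", "strategy", "open_research"})


def route(task: Task) -> Route:
    """Default to the local SLM; escalate only broad, non-sensitive work."""
    if task.contains_client_data:
        # Assumed policy: client-sensitive material never leaves the premises.
        return Route.LOCAL_SLM
    if task.kind in FRONTIER_KINDS:
        return Route.FRONTIER_LLM
    return Route.LOCAL_SLM


if __name__ == "__main__":
    for t in (
        Task("extract", contains_client_data=True),
        Task("ideate", contains_client_data=False),
        Task("ideate", contains_client_data=True),
    ):
        print(t.kind, t.contains_client_data, "->", route(t).value)
```

Defaulting to the local SLM keeps the expensive, off-premises path opt-in, which matches the “workers plus occasional consultants” framing.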

Diagram: Query → General Stack (ideate, e.g., GPT-5) → Guardrails (vector DBs, rules) → SLM Stack (refine, secure, e.g., Phi-3.5) → Output. A feedback loop retrains the SLMs on the resulting insights.
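The diagram can be read as a small function pipeline. In this sketch the general model, guardrails, and SLM are plain Python callables standing in for real model calls (no specific vendor API is assumed), and the `feedback` list stands in for whatever store later feeds SLM fine-tuning. All function names are hypothetical.

```python
from typing import Callable

Stage = Callable[[str], str]
Check = Callable[[str], bool]


def run_two_stack(
    query: str,
    general: Stage,
    guardrails: list[Check],
    slm: Stage,
    feedback: list[tuple[str, str]],
) -> str:
    """Query -> general model -> guardrails -> SLM -> output.

    Every accepted (query, output) pair is appended to `feedback`, standing in
    for the loop that later retrains the SLMs.
    """
    draft = general(query)  # ideate
    for check in guardrails:
        if not check(draft):
            raise ValueError(f"guardrail {check.__name__} rejected the draft")
    final = slm(draft)  # refine and secure
    feedback.append((query, final))
    return final


if __name__ == "__main__":
    # Stand-ins for real model calls; no vendor API is assumed.
    def fake_general(q: str) -> str:
        return f"Ideas for: {q}"

    def non_empty(text: str) -> bool:
        return bool(text.strip())

    def fake_slm(draft: str) -> str:
        return draft.upper()

    log: list[tuple[str, str]] = []
    print(run_two_stack("pricing strategy", fake_general, [non_empty], fake_slm, log))
    # IDEAS FOR: PRICING STRATEGY
```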

Workflow: a consultant brainstorms with Claude Sonnet 4.5, validates via Mistral NeMo, and delivers a compliant deck. Speedup: hours to minutes.

Zip them together, and two-stacks could birth “cognitive relays” that evolve firms into AI-augmented organisms. Exponential improvements in rack-mount GPU capacity will enable “firm intelligence.”

| Model Type | Examples | Params | MMLU | Cost per 1M Tokens | Strength | Weakness |
|---|---|---|---|---|---|---|
| General | GPT-5, Claude Sonnet 4.5 | 70B-1T+ | 85-92% | $0.50-2.00 | Ambiguity mastery | Subtle fatigue induction |
| SLM | Phi-3.5, Gemma 2 | 3-12B | 70-78% | $0.01-0.05 | Micro-economy enabler | Lacks emergent spark |
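The table’s cost column invites a quick blended-cost calculation for a two-stack mix. The sketch uses the upper end of each price range and a hypothetical share of tokens routed to the LLM. It covers per-token fees only and ignores the capital cost of on-prem GPUs, which a real comparison must include.

```python
def blended_cost_per_m(llm_share: float, llm_price: float, slm_price: float) -> float:
    """Average USD per million tokens when `llm_share` of tokens go to the LLM."""
    if not 0.0 <= llm_share <= 1.0:
        raise ValueError("llm_share must be between 0 and 1")
    return llm_share * llm_price + (1.0 - llm_share) * slm_price


if __name__ == "__main__":
    LLM_PRICE, SLM_PRICE = 2.00, 0.05  # upper ends of the table's ranges
    for share in (1.0, 0.5, 0.1):
        cost = blended_cost_per_m(share, LLM_PRICE, SLM_PRICE)
        print(f"{share:.0%} of tokens to LLM -> ${cost:.3f} per 1M tokens")
```

At a 10% LLM share, the blend comes to about $0.25 per million tokens versus $2.00 for an all-LLM setup, roughly an eightfold reduction under these assumptions.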

Technology Bottlenecks and Breakthroughs to Watch

To navigate the two-stack future, here is a house view on near-term (2025-2027) developments across key categories. It covers bottlenecks such as compute access and regulatory hurdles, and highlights the breakthroughs that would enable scalable adoption.

| Category | Bottlenecks | Breakthroughs to Watch | House View |
|---|---|---|---|
| Hardware | Prohibitive costs for on-prem GPUs mirror the pre-PC era of terminals relying on central servers; hyperscalers currently dominate via GPU-as-a-service. | Commercial/consumer GPUs dropping in price while rising in performance; rapid VRAM growth (e.g., 48GB+ cards) for hosting SLMs locally. Add ARM-based chips (Qualcomm/Apple) for efficient edge devices, and quantum hybrids by 2028. | Like the PC revolution, SLMs democratize AI, moving it from centralized to personal and enterprise local setups and shifting from “time-sharing” clouds to affordable in-house power. |
| Software | Many SLMs are quantized/distilled from LLMs, limiting native collaboration in two-stacks. | Task-specific, purpose-built SLMs designed for modular teamwork (e.g., NVIDIA NeMo frameworks for agentic customization); open-source tools for seamless LLM-SLM orchestration. | As the field evolves from general-purpose models to collaborative ecosystems, watch for “SLM swarms” that optimize two-stack efficiency beyond current hybrids. |
| Bandwidth | Token/context limits bottleneck large datasets; API calls exacerbate latency and costs. | On-prem shifts eliminate cloud limits; bidirectional RAG enables “infinite” contexts via local storage I/O; 5G/6G for hybrid edge sync. | As two-stacks go local, bandwidth pivots from API constraints to internal hardware speed, unlocking persistent contexts without external chokepoints. |
| Legislation | The US first-mover lead risks fragmentation from state-level AI safety laws (e.g., CA/NY bills), creating 50-jurisdiction chaos versus the unified EU AI Act. | Federal US bills for national standards; global harmonization efforts. Ironically, unified EU regulation could outpace a fragmented US. | Well-intentioned policies may slow US dominance, echoing fragmented Europe’s lag in ’90s computing; watch for the irony of EU cohesion challenging America’s at-scale market edge. |

Future Outlook and Conclusion

Looking ahead to 2030, hardware costs will plummet while capabilities climb, enabling models once confined to data centers to run on-prem, much as RAM and storage in rack-mount servers grew from single-digit megabytes and gigabytes to exponentially larger capacities. SLMs will rival today’s LLMs on wearables, while frontier models grow pricier and are reserved for rarefied tasks. Agents will orchestrate swarms, giving rise to “firm intelligences” that adapt dynamically. A single agent can blend multiple specialized SLMs with occasional LLM calls, using a modular approach in which the right-sized model tackles the right subtask, mirroring how agents break down problems. McKinsey forecasts 75% adoption by 2027, with power shifting to SLM tuners, upending Big AI and empowering performant, customized agents.

All views shared here are solely those of the author, and not even he is completely sure they are right. Enjoy the read and the ride.